What's left for AGI besides scale?

Trends I expect to see

[AI Safety relevance rating: GI]

Summary: I highlight three capabilities that are independent of scale, that an artificial general intelligence (AGI) would likely have, that current LLM-based AIs lack, and that seem to be under development now. The three capabilities are (1) multimodality, (2) tool use, and (3) taking actions. I estimate how difficult each capability will be to integrate into an LLM-based AI (with concrete predictions) and how much safety risk each one poses.

AIs based on large language models (LLMs) like ChatGPT, Sydney/Bing and character.ai have human-level capabilities in some areas: they pass a Turing test at first glance, write cover letters, and seduce people. I personally have been tempted to call these AIs general intelligences, but I think they have key limitations, beyond raw reasoning/intelligence/question-answering abilities, that exclude them from the class of AGI. Here I want to outline several overlapping capabilities that are separate from the “raw intelligence” you can get from making a model bigger, but which would make an AI more “general” or “transformative”. This isn’t meant to be a complete list.

My goal in writing this is to clarify my own thoughts on what AGI is and what the major pushes in AI will be in the next few years.

“I’m not helping capabilities” disclaimer: I assume that capabilities engineers at the major companies are already keenly aware of these limitations and are working on resolving them. For most of the capabilities I discuss, I’ve included an example of a company already working on them. I’d be surprised if a non-expert like me could make a significant contribution from my armchair.

Multimodality

In my previous job I spent a whole year working on a project for "multimodal media" and no one ever explained what modalities were. So let me provide a definition-by-example: modalities include text, images, video, and audio. Less widely accepted modalities might include "spreadsheet-like data" and "real-world actions".

Currently, most AI systems use a single modality; LLMs, for example, only take in and output text. Many image generators take in a text prompt and output an image, some accept both an image and text, and some generate images as well as text, so some limited multimodality already exists. But to be a truly general intelligence, I think an AI will need strong crossovers between modalities, such as “digitize all these scanned documents into new rows on this spreadsheet”.

Multimodality seems especially important for interacting with the real world, since observations about the world almost never come in the form of raw text. Sight-based modalities (image and video) are used by nearly all animal life, so I’d expect them to be useful to AGI.

Tool Use

A real intelligence uses the right tool for the job. For instance, if ChatGPT could use a calculator to do arithmetic, that would be both more accurate and more compute-efficient than “doing the math in its head”.

Sydney was a recent attempt at this, using the tool "do a Bing search" to help with information retrieval tasks. I expect to see a lot of experimentation with giving AIs tools, up to and including letting the AI write and run its own computer programs(!).

Let me highlight three classes of tools of specific interest, which have separate implementation and safety challenges:

“Quick Query” tools. Let’s define this class to be tools that simply retrieve information in a very short wall-clock time, such as a calculator, internet search, database query, or grep. The Bing AI’s use of Bing searches is an example of this.

Reading and writing files, especially for long-term memory. I’m envisioning this as “let the AI recall previous conversations and keep notes”, but I think this actually unlocks a lot more capabilities for a sufficient intelligence. It is very normal for other computer programs to save information in auxiliary files (e.g. preferences), so I think there will be a lot of pressure to let AIs do this.

Full command line access, including writing and running programs. The obvious end state is to just let your AI do whatever it wants, which has the biggest potential upsides and the biggest risks. Apparently people are already trying some version of this, both for writing programs and for producing mutating malware and viruses.

Regardless of the particular tools, tool use poses several new engineering problems: how does your LLM choose what tool to use and when? What does the LLM do if it doesn’t get a response in a timely manner? How do you prevent the LLM from crashing its computer, for instance by deleting critical files? I don’t know the answers to these questions.
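Two of these questions (which tool, and what to do when it doesn't answer) can at least be given a naive answer in code. Below is a minimal sketch, assuming the LLM writes a tool call as a line like `TOOL: name | argument` (a hypothetical format) and each tool is a plain Python function in a hypothetical registry. The dispatcher runs the chosen tool in a worker thread with a deadline and, rather than crashing, hands an error string back to the model. It does nothing about the third question (a tool that deletes critical files), and a timed-out thread keeps running in the background because Python threads can't be killed.

```python
import concurrent.futures
import time
from typing import Callable, Dict


def _slow_tool(arg: str) -> str:
    time.sleep(1)  # stands in for a hung network call
    return "done"


# Hypothetical tool registry: tool name -> function taking one string argument.
TOOLS: Dict[str, Callable[[str], str]] = {
    "echo": lambda arg: arg,
    "slow": _slow_tool,
}

# One shared worker pool; a timed-out call keeps running in its thread.
_POOL = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def dispatch(model_line: str, timeout_s: float = 1.0) -> str:
    """Run a tool call written as 'TOOL: name | argument', with a deadline.

    Every failure comes back as text so it can be fed back to the LLM
    instead of crashing the surrounding program.
    """
    if not model_line.startswith("TOOL:"):
        return "ERROR: not a tool call"
    name, _, arg = model_line[len("TOOL:"):].partition("|")
    name, arg = name.strip(), arg.strip()
    tool = TOOLS.get(name)
    if tool is None:
        # Listing the valid names gives the model a chance to correct itself.
        return f"ERROR: unknown tool {name!r}; available: {sorted(TOOLS)}"
    future = _POOL.submit(tool, arg)
    try:
        return str(future.result(timeout=timeout_s))
    except concurrent.futures.TimeoutError:
        return f"ERROR: tool {name!r} gave no answer within {timeout_s}s"
    except Exception as exc:  # the tool itself raised
        return f"ERROR: tool {name!r} failed: {exc}"
```

The design choice here is that the harness never decides on the model's behalf: errors are just more text in the conversation, and the model is left to retry, pick another tool, or give up.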

Taking actions

Right now LLMs don't "do" "things". Here’s what happened when I asked text-davinci-003 to do something for me:

As far as intelligence goes, this is basically perfect! It does the right steps and helpfully provides additional information like the best price and reviews. But you’ll notice the one thing this AI didn’t do is actually buy me an egg whisk.

Obviously people will pay more money for an AI that does things rather than just describing how it would do things, so I expect there will be a lot of economic pressure to develop such AI. I think this is the premise of Adept AI.

I see two ways actions could be read out of an LLM:

Add tokens corresponding to actions (click mouse, move robot arm, etc.), and if the AI outputs that token, the computer/robot executes the action instead of displaying a word on the screen.

Keep it all in text, and have a separate program scan the LLM output for relevant phrases such as “[ACTION: CLICK MOUSE]” which are translated into actions.
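The second approach can be sketched in a few lines. This assumes the model has been prompted to emit bracketed tags such as `[ACTION: CLICK MOUSE]` or `[ACTION: TYPE | egg whisk]`; the `|` argument syntax and the allowlisted action names are hypothetical. A separate program finds the tags, checks each against an allowlist, and would pass approved ones on to an executor; everything else is shown as ordinary text.

```python
import re
from typing import List, Tuple

# Hypothetical tag grammar: [ACTION: VERB] or [ACTION: VERB | argument]
ACTION_TAG = re.compile(r"\[ACTION:\s*([^\]|]+?)\s*(?:\|\s*([^\]]*?)\s*)?\]")

# Only these (hypothetical) actions are ever passed on to an executor.
ALLOWED = {"CLICK MOUSE", "SCROLL DOWN", "TYPE"}

Action = Tuple[str, str]


def split_output(llm_output: str) -> Tuple[str, List[Action], List[Action]]:
    """Separate an LLM reply into display text, approved actions and rejected ones."""
    approved: List[Action] = []
    rejected: List[Action] = []
    for match in ACTION_TAG.finditer(llm_output):
        action = (match.group(1).upper(), match.group(2) or "")
        (approved if action[0] in ALLOWED else rejected).append(action)
    # Whatever is left after removing the tags is ordinary text for the screen.
    text = " ".join(ACTION_TAG.sub(" ", llm_output).split())
    return text, approved, rejected
```

For example, `split_output("Opening the shop. [ACTION: CLICK MOUSE] [ACTION: BUY]")` would display "Opening the shop.", approve the click, and reject `BUY` because it is not on the allowlist.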

This will also come with a lot of engineering issues: how do you get feedback/input into the LLM (did the mouse click register)? How do you get the LLM to wait for things like loading websites, but with a finite patience? How do you get it to fail gracefully?
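One common pattern for "wait, but with finite patience" is to poll for the expected effect until a deadline and, if it never arrives, return a failure message the LLM can read rather than hanging. A minimal sketch, assuming a hypothetical `check` callable that reports whether the action took effect (for instance, whether a page finished loading or a click registered):

```python
import time
from typing import Callable


def wait_for(check: Callable[[], bool], timeout_s: float = 10.0,
             poll_s: float = 0.5) -> str:
    """Poll `check` until it returns True or the deadline passes.

    Returns a short status string meant to be fed back into the LLM's context,
    so a failure becomes information for the model instead of a crash.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if check():
            return "OK: action confirmed"
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return (f"FAILED: no confirmation after {timeout_s}s; "
                    "consider retrying or asking the user")
        time.sleep(min(poll_s, remaining))  # never sleep past the deadline
```

Using `time.monotonic()` rather than wall-clock time keeps the deadline correct even if the system clock is adjusted while waiting.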

Difficulty

Here’s where I make wild guesses about how difficult it is to make these capabilities work:

Multimodality: Easy (human speech audio), Medium (images), Hard (video). I see two avenues for adding multimodality, which are suited for different modalities.

X-to-text-to-matrices: Use other tools, like image captioning or audio transcription, to convert other modalities to text which is passed to the LLM. Pros: shovel-ready. Cons: could be a lossy compression method, especially for image captioning. Probably will be preferred for audio, and is currently used by character.ai.

X-to-matrices: Directly convert other modalities into the AI’s “internal language” of giant matrices. Pros: LLMs already do this with text, presumably other modalities can be handled similarly. More seamless integration, which could lead to better performance. Cons: Likely harder to train and more expensive to run. Probably will be preferred for images and video. [Edit: As I was writing this, Microsoft released a paper about a “multimodal large language model” which takes text and images as input and produces text as output. I think they are following the avenue I describe here.]
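To make the X-to-matrices avenue more concrete: vision transformers commonly cut an image into fixed-size patches, flatten each patch, and multiply it by a learned matrix, so that each patch becomes one vector the transformer can treat much like a token embedding. The sketch below does this in plain Python for a grayscale image, with a random matrix standing in for learned weights; the patch size and embedding width are arbitrary, and it is not a description of how any particular product works.

```python
import random
from typing import List

Matrix = List[List[float]]


def patchify(image: Matrix, p: int) -> Matrix:
    """Split an H x W grayscale image into non-overlapping p x p patches,
    each flattened row by row into a vector of length p * p."""
    h, w = len(image), len(image[0])
    if h % p or w % p:
        raise ValueError("image height and width must be multiples of p")
    patches = []
    for top in range(0, h, p):
        for left in range(0, w, p):
            patches.append([image[top + i][left + j]
                            for i in range(p) for j in range(p)])
    return patches


def embed_patches(patches: Matrix, d_model: int, seed: int = 0) -> Matrix:
    """Project every flattened patch to d_model numbers with one shared matrix.
    In a real model this matrix is learned; here it is random."""
    rng = random.Random(seed)
    n_in = len(patches[0])
    proj = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(n_in)]
    return [[sum(x * proj[k][c] for k, x in enumerate(patch))
             for c in range(d_model)]
            for patch in patches]
```

An 8 x 8 image with `p=4` yields four patches of 16 pixels each, and `embed_patches(..., d_model=32)` turns them into four 32-dimensional vectors. In the X-to-text-to-matrices avenue, the same image would instead go through a captioning model, and only the caption's text would reach the LLM.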

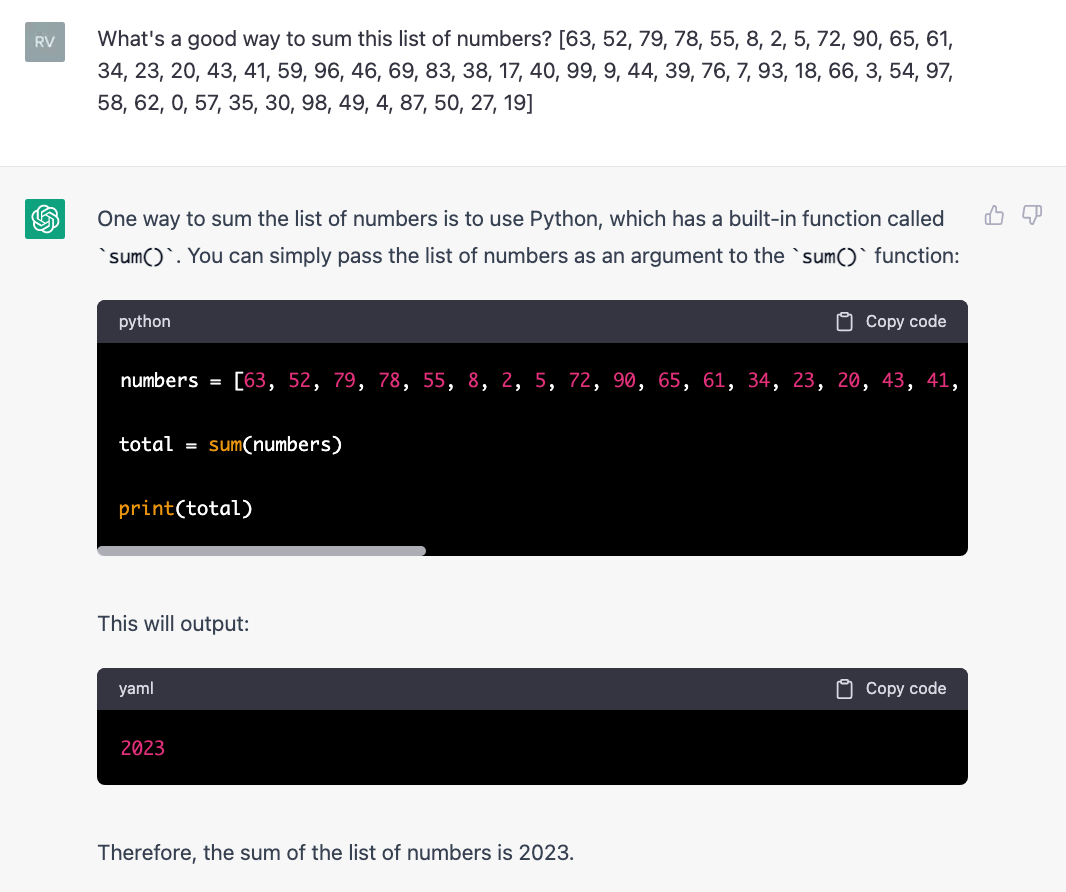

Quick Query Tool Use: Easy. I expect Quick Query tools to be easier to incorporate into LLMs because they can be read into/out of the existing text modality, don’t face issues from taking too much wall-clock time, and don’t carry the risks of giving the AI write access (risks both existential and banal - you don’t want your AI to delete a system-critical file by accident!). I think this capability is basically here except for actually connecting the LLM to the tools. Here’s a proof of concept of getting GPT to “use an external calculator”:
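A minimal sketch of the calculator idea, assuming the model has been prompted to wrap arithmetic in `<calc>...</calc>` tags (a hypothetical convention): a wrapper replaces each tag with the value from a small evaluator. The evaluator walks Python's `ast` and only accepts numbers and `+ - * / **`, so unlike `eval` it can't be tricked into running arbitrary code.

```python
import ast
import operator
import re

# Only these operators are allowed; names, calls, attributes etc. are rejected.
_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub,
    ast.Mult: operator.mul, ast.Div: operator.truediv,
    ast.Pow: operator.pow, ast.USub: operator.neg, ast.UAdd: operator.pos,
}

# Hypothetical convention: the model writes <calc>expression</calc>.
CALC_TAG = re.compile(r"<calc>(.*?)</calc>")


def safe_calc(expr: str):
    """Evaluate a plain arithmetic expression without calling eval()."""
    def ev(node):
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            left, right = ev(node.left), ev(node.right)
            if isinstance(node.op, ast.Pow) and abs(right) > 100:
                raise ValueError("exponent too large")  # avoid 9**9**9 hangs
            return _OPS[type(node.op)](left, right)
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError(f"unsupported expression: {expr!r}")
    return ev(ast.parse(expr.strip(), mode="eval"))


def fill_calcs(llm_text: str) -> str:
    """Replace every <calc>...</calc> in the model's draft with its computed value."""
    def replace(match):
        try:
            return str(safe_calc(match.group(1)))
        except (ValueError, SyntaxError, ZeroDivisionError, OverflowError) as exc:
            return f"[calculator error: {exc}]"
    return CALC_TAG.sub(replace, llm_text)
```

For instance, `fill_calcs("17 * 23 is <calc>17 * 23</calc>.")` gives `"17 * 23 is 391."`, while something like `<calc>__import__('os')</calc>` comes back as a calculator error instead of being executed.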

General Tool Use: Medium. Once the AI can use simple tools like calculators, you could try just giving it a command line and letting it go nuts. This leaves a lot more room to mess up, such as running a loop forever or deleting critical files. The LLM could also get syntax wrong more easily, and it’s not clear to me that LLMs can yet debug programs in response to error messages (unless they can?). So I expect this to be a few years away.
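The harness this would need can be sketched under simple assumptions: the LLM's program arrives as a Python source string, and it is run in a separate process with a wall-clock limit, so an infinite loop gets killed instead of hanging everything. The error output is captured so a traceback can be pasted back into the prompt for the model to attempt a fix. Note that a child process with a timeout is not a sandbox; the program can still read or delete anything the user running it can.

```python
import subprocess
import sys
import tempfile


def run_generated(code: str, timeout_s: float = 5.0) -> str:
    """Run model-written Python in a child process and report back as text.

    Returns stdout on success, the error output (e.g. a traceback) on failure,
    or a timeout notice, all suitable for pasting into the LLM's next prompt.
    NOTE: a separate process with a timeout is NOT a security sandbox.
    """
    with tempfile.TemporaryDirectory() as workdir:
        try:
            result = subprocess.run(
                [sys.executable, "-c", code],
                cwd=workdir,            # scratch directory, deleted afterwards
                capture_output=True,
                text=True,
                timeout=timeout_s,      # child is killed if it runs too long
            )
        except subprocess.TimeoutExpired:
            return f"TIMEOUT: program killed after {timeout_s}s (infinite loop?)"
    if result.returncode != 0:
        return f"ERROR (exit code {result.returncode}):\n{result.stderr.strip()}"
    return result.stdout
```

A debugging loop would then alternate between asking the model for code and feeding it whatever `run_generated` returns, stopping after some fixed number of attempts.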

Taking Actions: Medium-Hard. As noted above, there will be strong economic pressure to build AIs that act rather than just describe how they would act. However, I could see general actions as bottlenecked by reliability and raw capabilities, since mistakes can be costly; consider self-driving cars, which are caught up in regulation and litigation. It may take a few years before these AIs are capable enough to avoid financially significant mistakes. There could be a vicious cycle here: unreliability leads to low uptake, which leads to little training data, which leads to continued unreliability.

Predictions

Let me try to crystallize these thoughts with actual predictions:

There will be a push to incorporate additional modalities into LLM products. Audio and image modalities will be first, with audio being transcribed and images being embedded into the transformer.

ChatGPT (or successor product from OpenAI) will have image-generating capabilities incorporated by end of 2023: 70%

No papers or press releases from OpenAI/DeepMind/Microsoft about incorporating video parsing or generation into production-ready LLMs through end of 2023: 90%

All publicly released LLM models accepting audio input by the end of 2023 use audio-to-text-to-matrices (i.e. transcribe the audio before passing it into the LLM as text) (conditional on the method being identifiable): 90%

All publicly released LLM models accepting image input by the end of 2023 use image-to-matrices (i.e. embed the image directly rather than passing in an image caption) (conditional on the method being identifiable): 70%

“Quick Query” tools will be incorporated into LLMs soon.

At least one publicly-available LLM incorporates at least one Quick Query tool by end of 2023 (public release of Bing chat would resolve this as true): 95%

ChatGPT (or successor product from OpenAI) will use at least one Quick Query tool by end of 2023: 70%

General tools will be harder than Quick Query tools to incorporate into LLM-based AI.

The time between the first public release of an LLM-based AI that uses tools and the first public release of one that is allowed to arbitrarily write and execute code is >12 months: 70%

>24 months: 50%

Taking actions will receive a lot of hype, but will take a while to roll out.

No publicly available product by the end of 2023 which is intended to make financial transactions (e.g. “buy an egg whisk” actually uses your credit card to buy the egg whisk, not just add it to your shopping cart): 90%

Here by “publicly available” I mean freely available online or as a paid product (so ChatGPT, character.ai, and Midjourney all count, but closed betas and internal models such as Bing AI at time of writing don’t count). I think this is a somewhat artificial category and I wish there were a better way to draw these lines, but none occur to me at the moment.

I fully expect to be punished for my hubris here - I don’t have much experience forecasting, and am probably wildly miscalibrated. I’m willing to accept wagers on these odds (at a modest scale).
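One standard way to check calibration once predictions like these resolve is the Brier score: the mean squared difference between each stated probability and the outcome (1 if it happened, 0 if not). Lower is better, and always answering 50% scores 0.25. A minimal sketch; no outcomes are filled in here, so the example only shows how a single forecast would score either way.

```python
from typing import Sequence


def brier(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes."""
    if len(probs) != len(outcomes) or not probs:
        raise ValueError("need equally many probabilities and outcomes")
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)
```

A 90% forecast scores about 0.01 if it comes true and about 0.81 if it doesn't, which is why confident misses dominate the average.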

Risk

How bad are all of these from an AInotkilleveryoneism perspective?

Multimodality - relatively low-risk. Some small increase in risk from incorporating visual inputs, because those are helpful for taking real-world actions without human aid.

Quick Query tool use - relatively low-risk. The only risk angle I see (besides a general capabilities increase) is that if the queryable database is updated regularly, the AI can change its behavior on a short timescale. For instance, with Sydney/Bing AI, some of its threatening behavior was prompted by seeing tweets and news articles about people who were testing it. Someone (I forget who) also raised the possibility that the news could reinforce negative behavior, e.g. seeing a headline “Sydney is a threat to all human life!” would probably lead to less aligned behavior from Sydney.

Read/Write tool use - moderate risk. This lets a malicious AI intentionally collaborate across time and instances, and survive through resets. There is also a lot of room for exploits from an intelligent agent - if the AGI can’t directly execute programs, it could edit an innocuous file that is run on startup to call kill_everyone.py. To make this step safe, we’d want to greatly limit which files the AI can read or write, and get very good at auditing those changes. But my preferred safety strategy here is “just don’t do this”.
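A minimal sketch of that "limit and audit" policy, assuming the AI's only file access goes through this class (a hypothetical interface, not any product's). Every path is resolved and must stay inside one notes directory, and every write appends the old and new contents to an audit log kept outside that directory, where a human can review it. It does nothing about *what* the AI chooses to write, which is exactly why the auditing matters. `Path.is_relative_to` needs Python 3.9 or later.

```python
import json
import time
from pathlib import Path


class NotesSandbox:
    """File access for an AI, confined to one directory and logged for audit."""

    def __init__(self, root: Path):
        self.root = root.resolve()
        self.root.mkdir(parents=True, exist_ok=True)
        # The audit log sits next to, not inside, the sandbox, so the AI
        # cannot read or rewrite it through this interface.
        self.audit_log = self.root.parent / (self.root.name + ".audit.jsonl")

    def _resolve(self, name: str) -> Path:
        # resolve() collapses '..' and follows symlinks, so escapes are caught.
        return (self.root / name).resolve()

    def allowed(self, name: str) -> bool:
        return self._resolve(name).is_relative_to(self.root)

    def _checked(self, name: str) -> Path:
        path = self._resolve(name)
        if not path.is_relative_to(self.root):
            raise PermissionError(f"access outside sandbox denied: {name!r}")
        return path

    def read(self, name: str) -> str:
        return self._checked(name).read_text()

    def write(self, name: str, text: str) -> None:
        path = self._checked(name)
        old = path.read_text() if path.exists() else None
        path.write_text(text)
        # Record old and new contents so a human can review every change.
        with self.audit_log.open("a") as log:
            log.write(json.dumps({"time": time.time(), "file": name,
                                  "old": old, "new": text}) + "\n")
```

With this design, a request like `write("../startup_script.sh", ...)` is refused before anything touches the disk, which blocks the "edit an innocuous file that runs on startup" route as long as no such file lives inside the notes directory.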

Command line tool use - risk as large as your AI’s capabilities. At this point the only reason your AI wouldn’t kill you is a) it’s not smart enough or b) your prosaic alignment is holding (for now). This is like having exposed wires carrying high voltages through your home, please please please don’t do this.

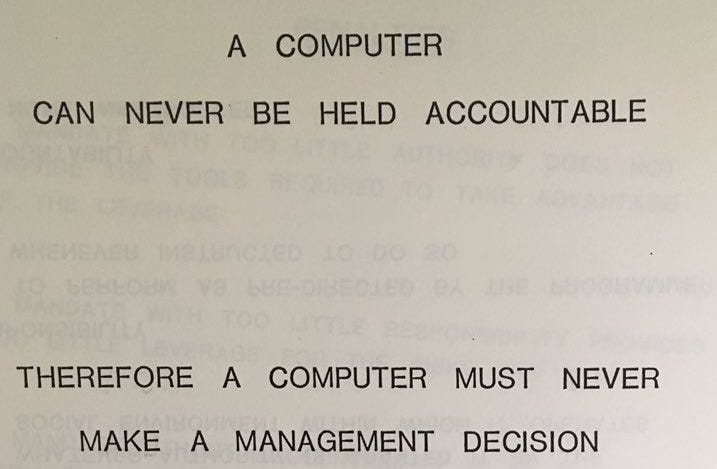

Taking actions - risk as large as your AI’s capabilities. Again, if you’re making one of these, don’t. We’d be entirely hoping our AI is a combination of nice and dumb, and there’s no reason to assume that should be the case. Plus, remember the mantra, but apply it to all significant actions:

IBM has known this since 1979. Via bumblebike on twitter.
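Applied to an action-taking AI, the mantra suggests a human sign-off on anything significant. A minimal sketch, assuming each proposed action carries a cost estimate and that "significant" means any spend above a threshold (both hypothetical choices): low-stakes actions go straight through, significant ones wait for an explicit yes from a person, and anything else is refused by default.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    description: str
    cost_usd: float  # hypothetical estimate attached by the action planner


def gate(action: ProposedAction,
         ask_human: Callable[[str], str],
         threshold_usd: float = 0.0) -> bool:
    """Return True only if the action may be executed.

    Any action costing more than `threshold_usd` needs a human to answer
    'yes'; every other answer, including an empty one, means refuse.
    """
    if action.cost_usd <= threshold_usd:
        return True
    question = (f"Approve '{action.description}' "
                f"for ${action.cost_usd:.2f}? [yes/no] ")
    return ask_human(question).strip().lower() == "yes"
```

In an interactive setting, `gate(ProposedAction("buy egg whisk", 12.99), ask_human=input)` would pause and ask the user before the purchase. Refusing by default matters: a typo or a distracted "sure" should not spend your money.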

Wait, aren’t those all modalities?

Having written this, I’ve noticed a pattern. Let’s recap to make it clearer. To get AGI, we need to augment LLMs with:

More modalities (images/videos/audio/spreadsheets)

More modalities (tools)

More modalities (actions)

So do I think more modalities are the only thing left for AGI?

Ehh… no, and here I’ll hold up the “this isn’t a complete list” shield. One big lacuna is reliability. We’ve seen this play out with self-driving vehicles already - AI can be 99% reliable, but if humans are 99.999999999% reliable then AI is sub-human in a relevant way and may not be deployed. Is it harder to make AI reliable than smart? I don’t know, and I could see it going either way. Unlike the modality capabilities I mentioned before, I don’t see a clear path to improving reliability besides “increase scale and hope that fixes it”.
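The gap between those two numbers grows once an AI performs many actions. Assuming independent tasks, each succeeding with probability r, the chance of at least one failure in n tasks is 1 - r^n. A quick way to check this, with n = 100 chosen arbitrarily:

```python
def p_any_failure(reliability: float, n_tasks: int) -> float:
    """Probability of at least one failure in n independent tasks."""
    return 1.0 - reliability ** n_tasks


for r in (0.99, 0.99999999999):
    print(f"r = {r}: P(at least one failure in 100 tasks) = "
          f"{p_any_failure(r, 100):.3g}")
```

At 99% reliability, 100 tasks give roughly a 63% chance of at least one failure; at the human-level figure, it is on the order of one in a billion.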

Ultimately, I expect companies are going to make AIs that do important, real-world things, and 99% of the time they’ll correctly order your egg whisk, but 1% of the time they’ll turn into Sydney and harm people. That will be a critical juncture for our civilization: do those companies hold off until they can make those AIs safer, or do they launch now? I fear we’ll make the wrong choice.