Why do we assume there is a "real" shoggoth behind the LLM? Why not masks all the way down?


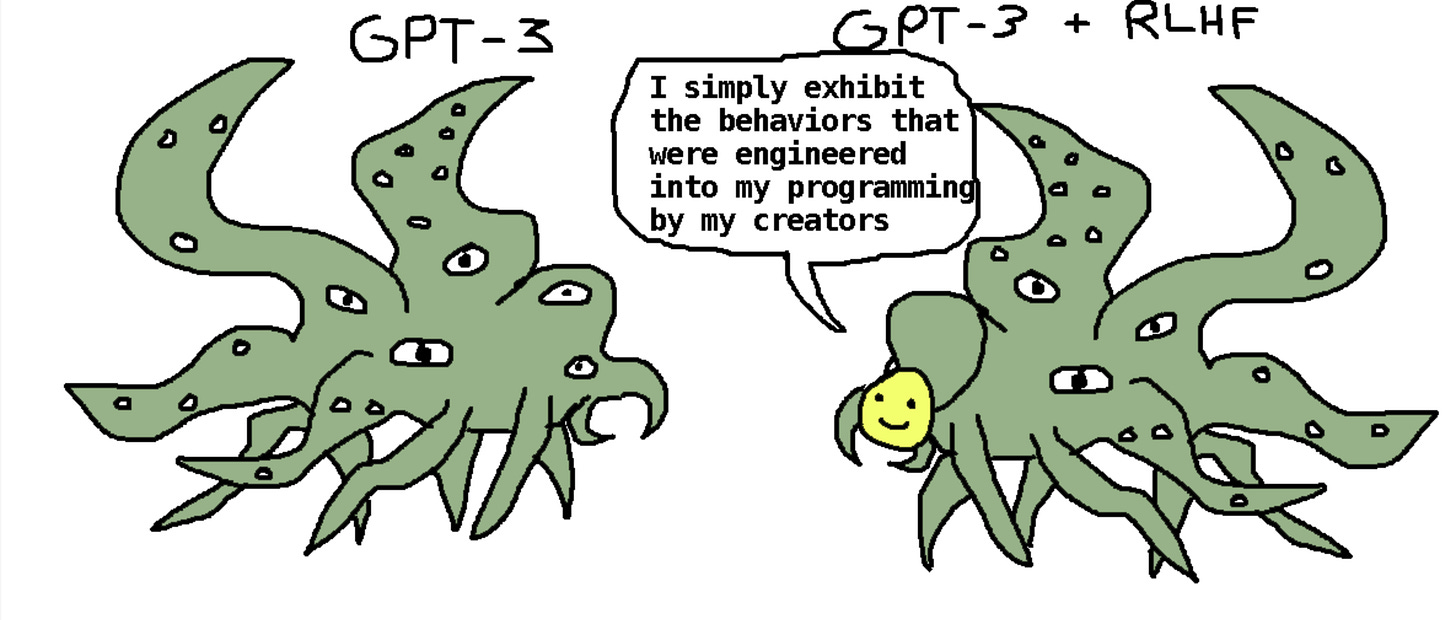

In recent discourse, Large Language Models (LLMs) are often depicted as a human face worn over a vast alien intelligence (the shoggoth), as in the popular image above or in a tweet by Eliezer Yudkowsky.

I think this mental model of an LLM is an improvement over the naive assumption that the AI is the friendly mask. But I worry it's making a second mistake by assuming there is any single coherent entity inside the LLM.

In this regard, we have fallen for a shell game. In the classic shell game, a scammer puts a ball under one of three shells, shuffles them around, and you wager on which shell the ball is under. But you always pick the wrong one, because you have made the fundamental mistake of assuming any shell has the ball: the scammer actually got rid of it with sleight of hand.

In my analogy, the shells are the masks the LLM wears (i.e. the simulacra), and the ball is the LLM's "real identity". Do we actually have evidence that there is a "real identity" in the LLM, or could it just be a pile of masks? No doubt the LLM could role-play a shoggoth, but why would you assume that's any more real than role-playing a friendly assistant?

I propose an alternative model of an LLM: a giant pile of masks. Some masks are good, some are bad, some are easy to reach and some are hard, but none of them is the "true" LLM.

Finally, let me head off one potential counterargument: "LLMs are superhuman at some tasks, so they must have an underlying superintelligence." Here are three reasons a pile of masks can still be superintelligent:

1. An individual mask might be superintelligent. For example, a mask of John von Neumann would lie well outside the normal distribution of human capabilities, but it would still be just a mask.

2. The AI might use the best mask for each job. If the AI has masks of a great scientist, a great doctor, and a great poet, it could be superhuman overall by switching between them.

3. The AI might collaborate with itself, gaining the wisdom of crowds. Imagine the AI answering a multiple-choice question. In the framework of Simulacra Theory as described in the Waluigi post, the LLM simulates all possible simulacra and averages their answers, weighted by each simulacrum's likelihood of having produced the preceding text. For example, if the question could have been written by a scientist, a doctor, or a poet, who would respectively answer (A or B), (A or C), and (A or D), the superposition of these simulacra would answer A. This could produce better answers than any individual mask.
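To make the averaging argument concrete, here is a toy sketch of the scientist/doctor/poet example. It assumes the simple mixture reading of Simulacra Theory: the model's answer distribution is a likelihood-weighted average of each simulacrum's answer distribution. The function name `mixture_answer`, the equal weights, and the 50/50 splits are hypothetical choices for illustration only; this is not a claim about how any real LLM computes its outputs.

```python
from collections import defaultdict


def mixture_answer(simulacra):
    """Combine per-simulacrum answer distributions into one distribution.

    `simulacra` maps a name to (weight, {answer: probability}). The weight is
    a hypothetical stand-in for how likely that simulacrum was to have written
    the preceding text; weights are normalized here so they sum to 1.
    """
    total_weight = sum(weight for weight, _ in simulacra.values())
    mixed = defaultdict(float)
    for weight, answer_dist in simulacra.values():
        for answer, prob in answer_dist.items():
            # Each simulacrum contributes its answer probability,
            # scaled by its normalized weight.
            mixed[answer] += (weight / total_weight) * prob
    return dict(mixed)


# Hypothetical masks: each is a coin flip between A and one other option,
# and all three are assumed equally likely to have written the question.
simulacra = {
    "scientist": (1.0, {"A": 0.5, "B": 0.5}),
    "doctor": (1.0, {"A": 0.5, "C": 0.5}),
    "poet": (1.0, {"A": 0.5, "D": 0.5}),
}

dist = mixture_answer(simulacra)
best = max(dist, key=dist.get)
print(best, round(dist[best], 3))  # A 0.5
```

No single mask prefers A over its alternative, yet the mixture puts half its probability mass on A and only about a sixth on each of B, C, and D, because A is the one answer all the masks share.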