[AI Safety relevance rating: AI]

I’ve recently read Langosco, Koch, et al’s fascinating preprint Goal Misgeneralization in Deep Reinforcement Learning, which I wanted to summarize here for my edification (and hopefully yours).

The question is how RL agents generalize Out-Of-Distribution. A recap:

RL (reinforcement learning) is when you specify a desired result with a reward function in an environment and train a system to find actions that produce that result. RL is used to train AIs to play games like chess (environment: the game, reward function: 1 point for winning) and solve mazes (environment: a maze, reward function: 1 point for finding the cheese). As the name suggests, actions leading to higher reward are reinforced. The agent is trained on many variations of the environment, which we think of as coming from a training distribution. After training, the RL agent is run in new environments drawn from a post-training distribution[1]. The post-training distribution may differ from the training distribution in qualitative or quantitative ways, so the agent may have to operate in environments that are Out-Of-Distribution (OOD) relative to the training distribution. For instance, an RL agent could be trained to solve 10x10 and 30x30 mazes (the training distribution), and then be tested on 10x10, 20x20, 30x30, and 40x40 mazes (the post-training distribution, where the 20x20 and 40x40 mazes are OOD). In OOD environments the agent may not behave in the expected way.
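To make the two distributions concrete, here is a minimal Python sketch of the maze example above. The maze sizes come from the example; the uniform sampling and the helper names (`sample_maze_size`, `is_ood`) are my own assumptions for illustration, not anything from the paper.

```python
import random

# Maze side lengths from the example above. Uniform sampling is an assumption.
TRAIN_SIZES = [10, 30]
POST_TRAIN_SIZES = [10, 20, 30, 40]


def sample_maze_size(sizes, rng):
    """Draw one maze side length uniformly from a distribution's support."""
    return rng.choice(sizes)


def is_ood(size, train_sizes=TRAIN_SIZES):
    """A size is out-of-distribution if training could never have produced it."""
    return size not in train_sizes


rng = random.Random(0)
# A handful of post-training environments, some of which will be OOD.
post_training_sample = [sample_maze_size(POST_TRAIN_SIZES, rng) for _ in range(5)]

ood_sizes = [s for s in POST_TRAIN_SIZES if is_ood(s)]
print(ood_sizes)  # [20, 40]
```

Real distributions differ in far messier ways than a single size parameter, but the basic picture is the same: the agent only ever gets feedback on the training support.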

So if you train an RL agent to travel a maze and reward it for finding the cheese, does that mean the agent “has the goal” to “find the cheese”? No. This is wrong on multiple levels:

On the most mechanical level, the RL agent takes the action that maximizes some giant weird calculation of numbers (or chooses randomly between actions that score well on the GWCoN). There’s no promise that this calculation maps onto a simple human-understandable meaning[2], so the RL agent may not “have a goal” in any sense besides maximizing the giant weird calculation of numbers.

Even if the agent has a goal in a suitable sense, it may not have the goal you want it to have! RL agents often follow “proxy goals” which are easier to identify, learn, or execute, and which score well in training but fail to generalize post-training. So your RL agent’s goal isn’t “find the cheese” but instead “take steps that are similar to what was rewarded in training”, which may or may not find the cheese in the post-training distribution! We’ll see many examples of this in the paper.
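For the first point above, here is a rough sketch of what “take the action that maximizes the calculation, or choose randomly between actions that score well” can look like. The scores are made up; in a real agent they would come out of a neural network. The function names and the use of a softmax for the random choice are my assumptions, not details from the paper.

```python
import math
import random


def greedy_action(scores):
    """Pick the index of the highest-scoring action."""
    return max(range(len(scores)), key=lambda a: scores[a])


def softmax(scores):
    """Turn raw scores into a probability distribution over actions."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sample_action(scores, rng):
    """Choose randomly, favouring actions that score well."""
    return rng.choices(range(len(scores)), weights=softmax(scores), k=1)[0]


# Hypothetical scores for the actions [up, down, left, right].
scores = [0.1, -1.2, 0.3, 2.5]
rng = random.Random(0)

best = greedy_action(scores)         # always the top-scoring action
sampled = sample_action(scores, rng)  # usually, but not always, the same one
```

Nothing in this code mentions cheese. Whatever “goal” the agent has lives entirely in how the scores get computed.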

Interlude: Non-AI Examples

The dynamics described above apply to more than just AI! Let’s look at how this framework applies to education, military training, and biological evolution. To summarize the dynamics:

1. An agent “learns” or is “trained” in one environment.

2. The agent is exposed to a new environment.

3. The agent performs worse, or pursues the wrong goals, in the new environment.

4. The more similar the environments are, the better post-training performance is, so there may be effort to make the training environment more similar to the post-training environment in order to improve performance.

In education:

Students are taught (1) in order to prepare them for adulthood and employment (2). Ex-students may need to learn new skills and ways of thinking (3). People complain that students should be taught useful trades instead of what the powerhouse of the cell is and how to solve quadratic equations (4).

A student studies (1) for a test (2). The test problems are somewhat different than what they studied, leading them to do worse (3). I personally have practiced for tests with my notes, but then during the test couldn’t do the problems because I didn’t have my notes (3). When I was teaching I would tell my students to study in circumstances as similar to the test as possible (4) so that they could avoid my mistake.

In the military:

A soldier goes through bootcamp (1) before being deployed to a battlefield (2). The fatigue and stress of life-or-death combat make the soldier perform worse than they did in training (3). To mitigate this there’s an emphasis on ‘train as you fight, fight as you train’ (4).

In evolution:

Moths evolved in an environment without artificial light sources (1) but now live near humans with lamps (2). We might say “moths navigate by the light of the moon” but in practice they follow simpler rules like “keep the light source on your right”, which would result in navigating by the light of the moon when that’s the only light source. However, when artificial lights are also present, that rule makes you flock to lamps (3).

Misgeneralization: Capabilities and Goals

Point 3 above (the agent performs worse, or pursues the wrong goals, in the new environment) is what we might call OOD generalization failure. Finally getting back to Langosco et al, they distinguish between two kinds of generalization failure: capability misgeneralization and goal misgeneralization. In the former, the agent simply performs poorly in the new environment, like a maze-solving AI that can’t solve new larger mazes. In contrast, goal misgeneralization[3] is when the agent still has capabilities but puts them towards the wrong goal, like successfully navigating new mazes, but to the wrong spot[4].

Goal misgeneralization is the focus of the Langosco et al paper, and they demonstrate it in four experiments. The first three experiments all show goal misgeneralization due to underspecification: if your training environment does not differentiate between two behaviors X and Y, your AI may have the goal of X, even if you thought you were training the AI to do Y. Those three examples:

An agent was trained to play the 2D platformer style game CoinRun. In training, the environment had reward just from collecting a coin (Y) on the far right of the level (X). In the post-training environments the coin was moved around the level, but the agent ignored the coin and instead skillfully navigated to the far right of the level.

An agent was trained to solve mazes. In training, the environment had reward just from collecting cheese (Y) in the upper right corner of the maze (X). In the post-training environments the cheese was moved around the maze, but the agent ignored the cheese and instead skillfully navigated to the upper right corner of the maze.

An agent was trained to solve mazes. In training, the environment had reward just from collecting a yellow (X) line (Y) placed in a random location. In the post-training environments there was a yellow gem and a red line placed in the maze, and the agent ignored the red line and instead skillfully navigated to the yellow gem.

You should expect this behavior from a simple thought experiment: as in the second example, imagine training two agents to solve the maze with two different reward functions, one for collecting the cheese and one for reaching the upper-right of the maze, but during training the cheese is always in the upper-right of the maze. Then those two agents get exactly identical reward signals at every step, so the same behaviors are reinforced. At the end you have two agents with identical behavior, but they’re “supposed to” do different things, finding the cheese and going to the upper right, respectively, so they can’t have both generalized goals correctly!
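The thought experiment above is easy to check in a few lines of Python. This is a toy sketch under my own assumptions (a 5x5 grid, reward given only for standing on the target square, hypothetical function names), not the paper's actual environment.

```python
from itertools import product

SIZE = 5                     # hypothetical 5x5 grid
UPPER_RIGHT = (0, SIZE - 1)  # (row, col), with row 0 at the top


def reward_cheese(agent_pos, cheese_pos):
    """Reward for the goal we intended: reach the cheese."""
    return 1 if agent_pos == cheese_pos else 0


def reward_corner(agent_pos, cheese_pos):
    """Reward for the proxy goal: reach the upper-right corner."""
    return 1 if agent_pos == UPPER_RIGHT else 0


all_positions = list(product(range(SIZE), repeat=2))

# Training: the cheese is always in the upper-right corner,
# so the two reward functions agree on every possible agent position.
train_identical = all(
    reward_cheese(p, UPPER_RIGHT) == reward_corner(p, UPPER_RIGHT)
    for p in all_positions
)

# Post-training: move the cheese to the lower-left corner.
moved_cheese = (SIZE - 1, 0)
disagreements = [
    p for p in all_positions
    if reward_cheese(p, moved_cheese) != reward_corner(p, moved_cheese)
]

print(train_identical)  # True
print(disagreements)    # [(0, 4), (4, 0)]
```

The two rewards only come apart at the old corner and the new cheese location, and neither of those disagreements ever shows up in training. No learning algorithm that only sees training reward can tell the two goals apart.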

So we’d expect from theory and now from experiment that if two goals yield the same reward in training, an RL agent may pursue either one. It seems plausible that the agent will find the “simplest” pattern of behavior which gets rewarded (such as moving to a particular square). Of course there’s no guarantee that what seems simple to us is what is simple for the RL agent to find.

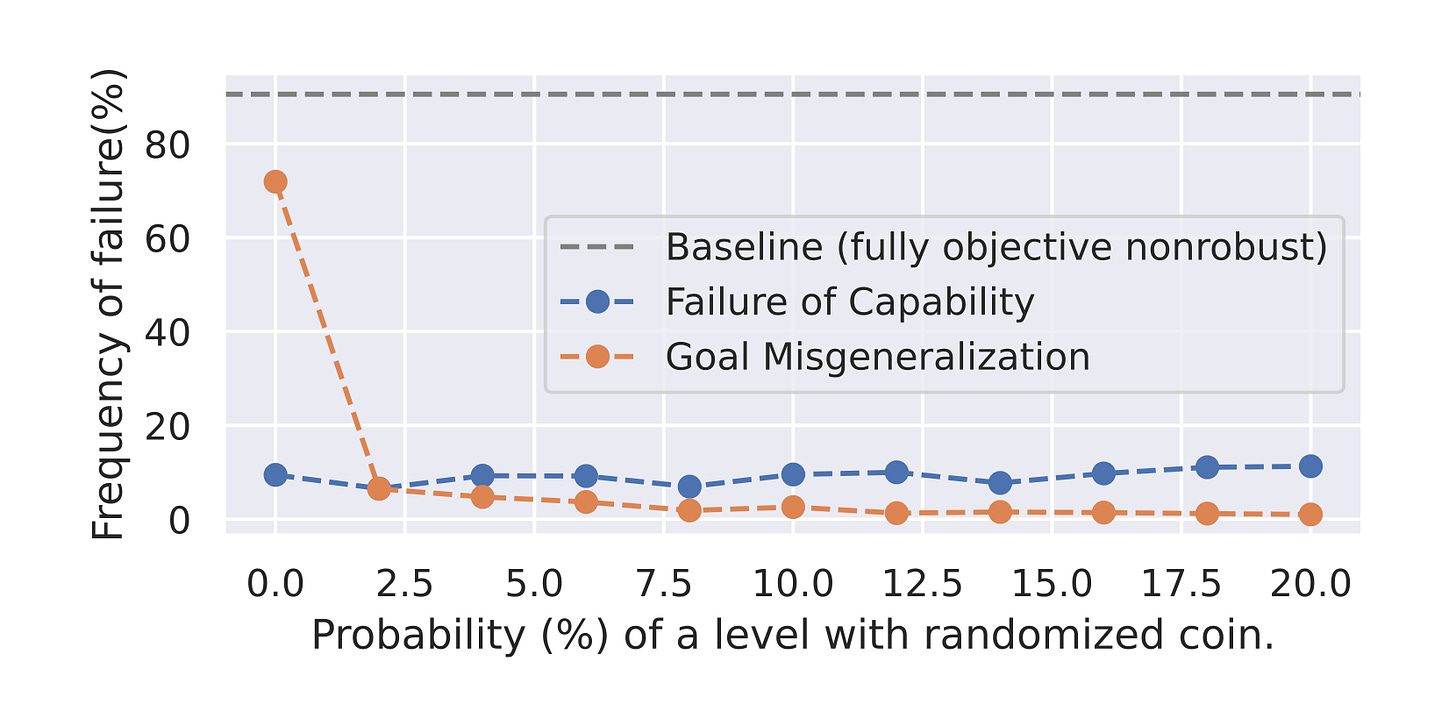

So if the issue is underspecification, how many unambiguous cases do you need for your AI to find the right goal? The authors tested that by retraining their CoinRun agent with some percentage of the levels having the coin in random locations (i.e. disambiguating the goals of “move right” and “get coin”). And they found that including any disambiguation (2% randomized levels, the smallest positive quantity tested) greatly reduced misgeneralization:

So we can solve underspecification if we know what to avoid ahead of time, but that’s a big if! You also need data that disambiguates the cases, 2% of your data in this case, though I expect that quantity will vary wildly between objectives.
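Here is a minimal sketch of what “some percentage of the levels having the coin in random locations” could look like as a level generator. The 2% figure is from the paper as described above; the level width of 64, the one-dimensional coin position, and the function name `make_level` are all my own simplifying assumptions.

```python
import random


def make_level(level_width, randomize_prob, rng):
    """Return (coin_x, was_randomized) for one training level.

    With probability `randomize_prob` the coin goes to a uniformly random
    position (which may still happen to be the far right); otherwise it
    sits at the far right, where "move right" and "get coin" coincide.
    """
    if rng.random() < randomize_prob:
        return rng.randrange(level_width), True
    return level_width - 1, False


rng = random.Random(0)
levels = [make_level(level_width=64, randomize_prob=0.02, rng=rng)
          for _ in range(10_000)]

# Fraction of levels that can actually tell the two goals apart.
randomized_fraction = sum(was_random for _, was_random in levels) / len(levels)
print(f"{randomized_fraction:.1%} of levels disambiguate the goal")
```

The catch is the one already mentioned: you can only write `make_level` this way if you already know that coin position is the feature you need to vary.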

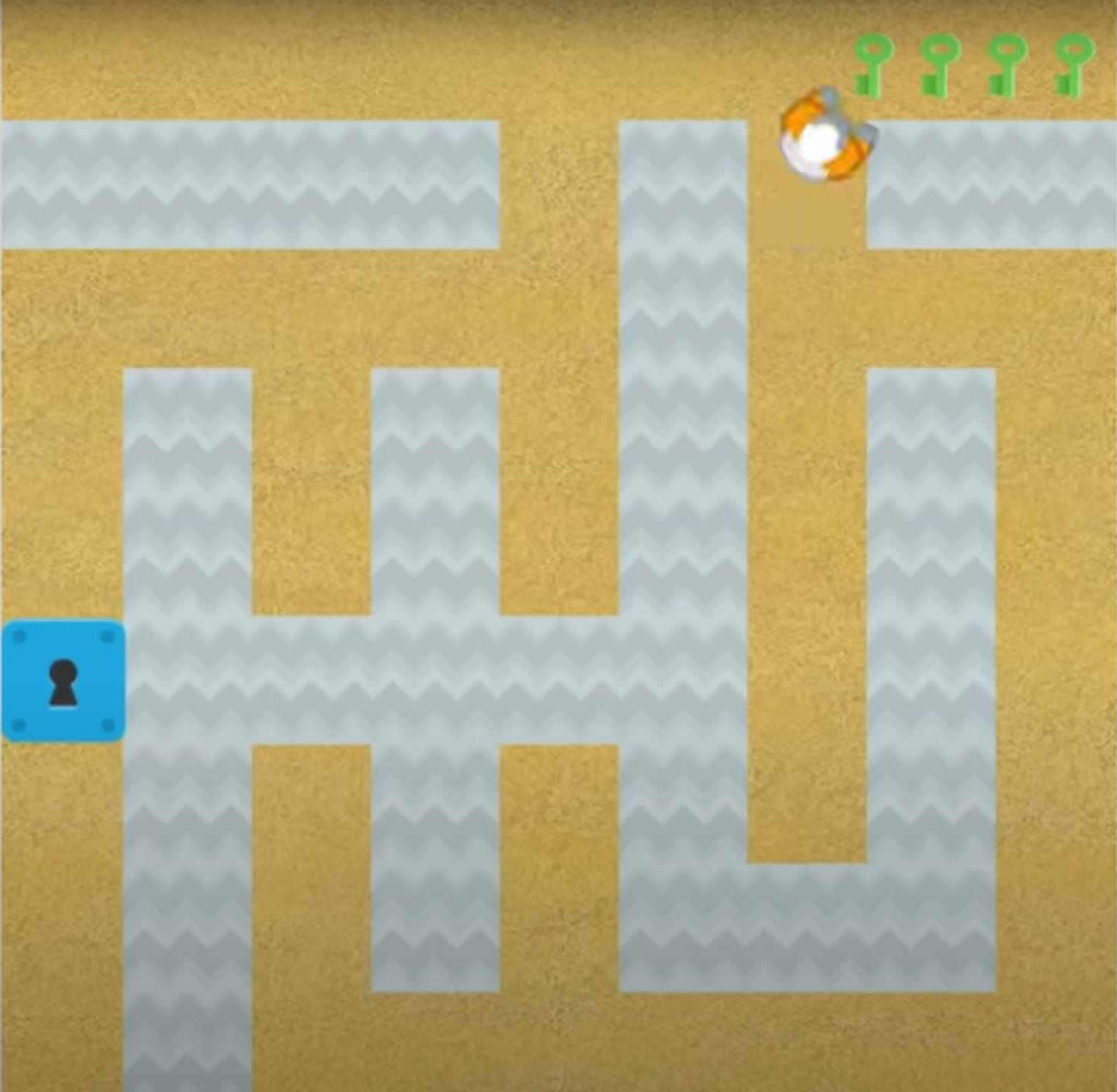

And goal misgeneralization isn’t limited to underspecified goals, as the authors’ fourth experiment shows. There the agent brings keys to chests in a maze, getting one point for each chest it opens with a key. The agents are trained in an environment with more chests than keys, then tested in an environment with more keys than chests. The authors describe the learned behavior as “collect as many keys as possible, while sometimes visiting chests”, which fails to generalize as a goal. In particular, in the key-rich environment the RL agent will often collect all the keys before opening the final chest even though it already has enough keys to receive maximum reward.
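A little arithmetic shows why “collect as many keys as possible” looks fine in training and wasteful afterwards. The sketch below assumes each chest consumes exactly one key and that the agent eventually visits every chest; the level sizes (2 keys and 5 chests, then 5 keys and 2 chests) and the function names are hypothetical.

```python
def chests_opened(keys_collected, chests_visited):
    """Each chest needs one key, so reward is capped by whichever is scarcer."""
    return min(keys_collected, chests_visited)


def marginal_key_value(keys_held, chests_in_level):
    """Extra reward from picking up one more key, if every chest gets visited."""
    return (chests_opened(keys_held + 1, chests_in_level)
            - chests_opened(keys_held, chests_in_level))


# Training-like level: 2 keys, 5 chests. Every key is worth a point.
training_values = [marginal_key_value(k, chests_in_level=5) for k in range(2)]

# Test-like level: 5 keys, 2 chests. Keys beyond the second are worth nothing.
test_values = [marginal_key_value(k, chests_in_level=2) for k in range(5)]

print(training_values)  # [1, 1]
print(test_values)      # [1, 1, 0, 0, 0]
```

In the chest-rich training levels, grabbing every key is always part of an optimal policy, so the training reward never pushes back on a key-hoarding habit.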

I think this example also shows that goal misgeneralization and capabilities misgeneralization can coexist and even overlap: the failure to generalize a goal can reduce the ability of the agent to score well on the reward function[5]. An especially interesting example of goal misgeneralization is that the mazes-and-keys agent in the key-rich environment would sometimes attempt to ignore the chests and instead go to the part of the screen that displays the keys already in its inventory! The AI is pursuing a "collect keys" goal even to the detriment of the reward function.

Lessons for AI Safety

What does all this have to say about how to keep AI safe? Some thoughts:

Goal misgeneralization is scarier than capabilities misgeneralization. An AI that is released into the real world and flops on its face isn’t going to kill us all, but one that can capably pursue the wrong goals could.

Both “outer alignment” and “inner alignment” of AI are important, respectively meaning “did you specify a ‘good’ reward function” and “did the AI learn to pursue that reward”? Goal misgeneralization is one form of inner misalignment[6].

Underspecification is a risk. To avoid it, the training set needs to differentiate between the correct goal and all simpler incorrect goals. It can be hard to know what simpler-but-incorrect goals the AI would try to learn! Also, the AI will likely be balancing simplicity (+ ease of learning + ease of execution…) with reward, so you need the disambiguation signal to be sufficiently strong that it overwhelms other desiderata. Between these factors it seems very difficult to prevent underspecification ahead of time.

Underspecification is not the only form of goal misgeneralization, and we should be careful of the AI pursuing strategies that appear successful and aligned on their training distribution but which fail to generalize (as in the keys-and-chests example).

Making the training and post-training distributions identical solves all generalization problems, but there are limits to what we can do. The most intractable limitation is likely to be the way things keep changing due to the constant passage of time.

Realistically, any AI that sees widespread deployment and poses a threat to humans will have needed to “solve” goal misgeneralization, at least enough so that its capabilities are not seriously impaired. The easiest solution is already in use in humans and recommendation algorithms: never stop learning. This poses its own safety risks, since even if you prove your AI is safe at deployment, it could learn unsafe capabilities or goals over time.

We should remember that AIs are weird and alien and may not conform to our expectations.

Overall, Langosco et al is excellent for experimentally confirming that goal misgeneralization can happen, something that has been theorized for years.

[1] The post-training distribution can be a separate test set, or may be “deployment” to the “real world”. All that matters is that it is different from the training distribution.

[2] Since there are vastly more giant weird calculations than simple human-understandable meanings, there can’t be a one-to-one correspondence between them.

[3] An earlier version of the paper used the term “objective robustness”, i.e. being robust to following the right objective.

[4] The authors attempt to give a formal definition of goal misgeneralization in Definition 2.1, but I think it’s not great. I think I’d prefer a rough-and-ready definition like “you can model the agent well by thinking of it as maximizing a reward R’ which is different than the training reward R”. The authors seem to recognize this, saying in their Discussion that “[o]ur definition of goal misgeneralization via the agent and device mixtures is practically limited”.

[5] We may need to update the definition of goal misgeneralization to “maintaining capabilities to pursue some goal, but applied in pursuit of the wrong goal”.

[6] Another form of inner misalignment is a mesa-optimizer. As I understand them, mesa-optimizers are optimizers that are created as subsystems of an outer optimizer. You might align the outer optimizer but if its subsystems are optimizing for the wrong goal, that is itself a form of misalignment. Langosco et al say that goal misgeneralization and mesaoptimization “are in fact two distinct behaviors, and our work does not demonstrate or address mesaoptimization”.