Intro to AI Safety

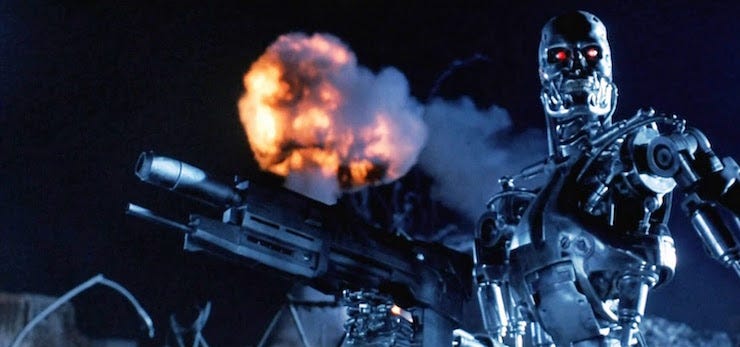

Pictured: What we want to avoid.

[AI safety relevance rating: AI]

This post is a writing exercise for me to condense all my entry-level thoughts on AI safety and make them accessible to anyone not already steeped in AI work. As the saying goes, you don’t truly understand something unless you can explain it to your grandmother, so Joanne if you’re reading then please let me know how I did.

What is AI Safety?

There are two camps of people who want to make AI safe. To summarize each group in a tweet, we first have the "address current harms from AI" camp1:

And in the other corner, the people worried about future AI being extremely dangerous2:

Allegedly, these two groups are “at each other’s throats”, but allow me to be generous to both sides by saying that we should prevent bad things in the present and the future, and that both forms of AI safety are important and can coexist. Imagining the former group as wanting to take a 100% chance to improve things by 10% right now, and the latter group as wanting a 10% chance to improve things by 100% in the future, you can see why reasonable people might want to pursue one or the other.

That said, this blog will focus on the notkilleveryonism form of AI safety.

What AI do we need to be safe from?

Back in the halcyon days of my youth, "AI" meant the computer-controlled enemies in video games3. Nowadays, the term sounds good in marketing, so everything that vaguely involves a computer making a choice gets called AI. But the AI we really want to be safe are ones which are at or beyond a human level of capabilities in many domains. These AI do not exist yet, but I expect to see them during my lifetime. Some commonly used terms for such AI are:

Artificial General Intelligence (AGI): AI that can operate across a wide range of domains (or "all" domains) in the way a human can.

Transformative AI (TAI): Any kind of AI that dramatically changes human civilization, for instance by automating most of the work humans currently do.

Superintelligence: Something whose intelligence ("general ability to accomplish tasks") vastly exceeds humans'. May be an AI or something else (e.g. an enhanced human), but there are reasons to think AI have more potential for superintelligence than humans.

These terms overlap, but not completely. AGI would likely be TAI (unless it were prohibitively expensive to run). In principal we could have TAI before AGI, such as the proposal for a "STEM AI" that can automate science and make decades of progress in years, but lacks other capabilities. Superintelligence would be AGI by definition, and it would almost certainly be transformative (unless we kept it in a box - and good luck with that).

I think we're likely to get TAI, AGI, and superintelligent AI around the same time, because all three seem closer to each other than to current AI systems, and because any one of the three could speed progress towards the others.

But what IS Intelligence?

There can be endless discussions of what intelligence truly is, and I want to sidestep that. For the purposes of AI safety, intelligence means something like "general ability to accomplish tasks, including situations that require planning, prediction, and knowledge of systems". So if someone says "artificial intelligence could kill everyone" and you feel the urge to question if it is truly intelligent, please mentally substitute "an artificial system with capabilities to achieve arbitrary tasks could kill everyone" and so on.

What Could Go Wrong?

Our culture generally welcomes scientific and technological progress, though there are some exceptions. We view technologies like biological and nuclear weapons as increasing the chance of human extinction or civilizational collapse, so we discourage their development.

Development of AGI could be just as dangerous, or more so.

AI could be uniquely catastrophic since it’s the first technology that can pursue runaway goals, self-improve, and can operate independently of humans.4 The baseline case we are worried about is a runaway "paperclipper" AI. Imagine you give an AI a random goal like "make paperclips". The way we currently make AI is to optimize a goal, so it hears "make as many paperclips as possible". Once the AI is smart enough, it realizes that it's always helpful to have more intelligence, computational resources, money, power, etc and so it starts accruing those resources ("instrumental convergence"). If left unchecked, the AI will become arbitrarily powerful and turn the entire planet to paperclips (including you. As Elizier Yudkowsky says, "The AI does not hate you, nor does it love you, but you are made out of atoms which it can use for something else"). If you or humanity as a whole try to stop the AI, then it will take the paperclip-maximizing step of fighting back, and depending on the capabilities of the AI we may lose.

This is bad!

There are many ways to avoid such an outcome, and I'll make a future post running down some of the frontrunning ideas. One prominent idea is to give the AI a goal that we'd be okay with a runaway AI optimizing forever. This is "AI alignment", and the largest results in alignment so far are of the form “alignment is even harder than you’d think”.

But unsafe outcomes of AI are not limited to human extinction. Possible bad outcomes include:

The AI keeps some human-like beings alive in an extreme state (e.g. drugged brains in jars) because we told it to maximize "human happiness" or similar.

The AI is perfectly aligned, but to too small a group of humans, resulting in a dictatorship or oligarchy.

The AI spreads throughout the universe ending all life, beauty, and things of moral value.

So the worst-case scenario of unsafe AI could be far worse than it killing everyone, and there are reasons to think that the “typical” outcome is that it kills everyone.

Okay but will the AI kill everyone?

Here we come to one of the perennial problems of AI safety work: massive uncertainty. At time of writing, AI has not killed everyone [citation needed]. But AI capabilities improve each year, the future is a long time, and there doesn't seem to be any reason that computer intelligences won't eventually be better than a human at most tasks, including tasks that result in humans extinction.

When will AI be powerful enough to pose a significant x-risk? What are the odds that the "goal" of a "randomly chosen" AGI is unaligned enough that we doom humanity? How easily can a malicious AI increase its own power, by becoming more intelligent, copying itself, or commandeering money and computational resources? Is "unaligned seed AI has a rapid takeoff and kills everyone" even the most significant x-risk from AI, as opposed to AI-empowered dictatorship or something else? As the saying goes, predictions are hard, especially if they're about the future, so I don't have answers to these questions.

But a widely accepted answer for "what's the probability an AI kills us all?" is "too high", and one doesn't need a precise answer to try to reduce that chance. That’s currently my answer.

Case Study: Human Evolution

Let’s wrap up by looking at our only example of general intelligence emerging: homo sapiens evolving from our ancestors. Some results of human evolution:

Human intelligence gives us capabilities far exceeding (in several domains) everything else produced by evolution.

Human capabilities are far beyond the comprehension of everything else produced by evolution.

Evolution has lost almost all control over humanity. In particular, there's no way to "turn off human intelligence" if it was somehow displeased and wanted to try again.

Human intelligence went from "on par with other animals" to "vastly exceeding nonhuman capabilities" in a very short time relative to evolution.

Human intelligence has led to the extinction of several species, both accidentally and intentionally.

Human intelligence is not aligned to evolution's goals, in the sense that our goals are largely unrelated to evolutions goals.

This example occupies a prominent role in my thinking. If we create AGI with similar outcomes (swapping "human intelligence -> AGI" and "evolution -> human designers"), humanity would be entirely at the mercy of something far beyond us, with no regard for our values or lives. There’s a lot of work to do to make sure the emergence of AGI ends well for humanity.

I recently saw the term FAccT (Fairness, Accountability, Transparency) for the “address existing harms” camp.

Near-synonyms for notkilleveryoneism include "advanced/strong AI/AGI safety", "AI alignment", and "reducing catastrophic/existential/x-risk from AI". Most of the time, I’ll just refer to it as AI safety.

Such as in this 2004 review of Half-Life 2 https://www.ign.com/articles/2004/11/15/half-life-2-review.

One could argue that some other technologies, like nation-states, corporations, memes, and unintelligent machines have some of these properties, but I think AGI will be the first technology to have all those properties, making it uniquely dangerous.