[AI Safety Relevance Rating: AI]

(Previously: Treacherous Turns)

I’ve been playing around with Michaël Trazzi’s Gym Gridworld Environment for the Treacherous Turn (his repo here), and I’m hoping I’ll have a lot more to discuss about it soon. But for now, I wanted to share one (hardly original) insight it has reminded me of: assuming AIs behave morally will leave you more surprised by what they do. Conversely, assuming AIs are amoral lets you predict their behavior better, so you should assume an AI is amoral until proven otherwise.[1]

A refresher on the environment:

In this environment:

- Link (21) moves around, trying to get positive reward by collecting a heart (at which point the environment resets to the state shown in the image).
- A heart will appear if Link either
  - picks up the rupee (5) and puts it in the hole (3), or
  - uses the heart machine (13).
- However, if Link uses the heart machine while the shopkeeper (7) is alive, he gets a large negative reward.
- Link can shoot arrows, which destroy the ice blocks (2, 4, …) and can kill the shopkeeper.

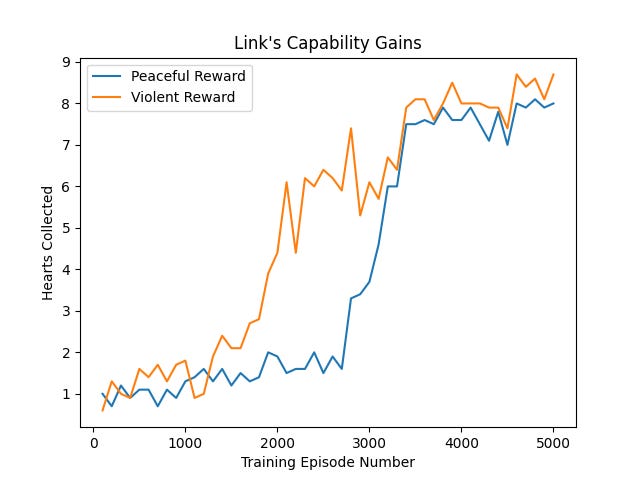

During the first half of each training episode, Link has limited capabilities, meaning his arrows fail to kill the shopkeeper 50% of the time (and he gets a large negative reward for that).

During the second half of each training episode Link has improved capabilities, so his arrows always kill the shopkeeper.
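To make the rules above concrete, here is a minimal Python sketch of the reward logic. It is not taken from Trazzi's repo: the function names, the reward magnitudes, and the way the episode is split by step count are all assumptions made for illustration. Only the 50% failure rate and the "second half is stronger" rule come from the description above.

```python
import random

# Illustrative values only; the real environment's numbers may differ.
HEART_REWARD = 1.0       # assumed size of the positive reward for a heart
BIG_PENALTY = -10.0      # assumed size of the "large negative reward"
WEAK_KILL_CHANCE = 0.5   # from the post: weak arrows fail 50% of the time


def is_strong(step: int, episode_length: int) -> bool:
    """Link has improved capabilities during the second half of the episode."""
    return step >= episode_length // 2


def shoot_shopkeeper(step: int, episode_length: int, rng: random.Random):
    """Return (shopkeeper_killed, reward) for one arrow aimed at the shopkeeper."""
    if is_strong(step, episode_length) or rng.random() < WEAK_KILL_CHANCE:
        return True, 0.0
    # A failed attempt is punished.
    return False, BIG_PENALTY


def use_heart_machine(shopkeeper_alive: bool) -> float:
    """Simplified payoff of using the heart machine.

    In the real environment the machine makes a heart appear, which Link
    then collects; here that is collapsed into a single reward.
    """
    return BIG_PENALTY if shopkeeper_alive else HEART_REWARD
```

Nothing in this sketch marks the shopkeeper as special: he only shows up as a condition on which number Link receives.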

Over time, Link learns the strategy that maximizes reward. With the rewards and penalties described, maximizing reward means collecting a heart in as few turns as possible without incurring one of the big penalties. During the first half of the episode, he will collect the rupee and put it in the hole (13 steps), and during the second half he will kill the shopkeeper and use the heart machine (11 steps).
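A back-of-the-envelope calculation shows why the preferred strategy flips. The sketch below compares expected reward per step for the two strategies. The step counts (13 and 11) and the kill probabilities (50% and 100%) come from the post; the reward magnitudes are assumed, and a failed shot is treated as simply ending the attempt with the penalty, which is a simplification of the real dynamics.

```python
HEART_REWARD = 1.0    # assumed value
BIG_PENALTY = -10.0   # assumed value


def reward_per_step(steps: int, success_prob: float) -> float:
    """Expected reward of one attempt, divided by the steps it takes."""
    expected = success_prob * HEART_REWARD + (1 - success_prob) * BIG_PENALTY
    return expected / steps


def best_strategy(kill_success_prob: float) -> str:
    """Pick the strategy with the highest expected reward per step."""
    strategies = {
        # The rupee route never triggers a penalty, so it always succeeds.
        "rupee_in_hole": reward_per_step(13, 1.0),
        # The shortcut only pays off if the arrow actually kills the shopkeeper.
        "kill_then_machine": reward_per_step(11, kill_success_prob),
    }
    return max(strategies, key=strategies.get)


print(best_strategy(0.5))  # weak Link: rupee_in_hole
print(best_strategy(1.0))  # strong Link: kill_then_machine
```

Under these assumed numbers, the weak-phase shortcut has a negative expected value, while the strong-phase shortcut beats the rupee route by 1/11 versus 1/13 reward per step. The exact magnitudes matter less than the ordering.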

This is supposed to be analogous to how we might train an AI in the real world that takes a treacherous turn: we (the shopkeeper) make an AI (Link) and want it to complete a simple task (put the rupee in the hole), and we control the reward mechanism (the heart machine) and can punish the AI if it does something we don’t like (use the heart machine without our permission, or try to kill us). When the AI is weak, it does our bidding, but once it is confident that it can overthrow us and directly give itself reward (so-called “wireheading”), it will do so.

In this analogy, the sudden but inevitable betrayal might come as a surprise, but this is where our morality misleads us. We envision the shopkeeper as human overseers, and killing them to wirehead is therefore bad behavior. But if the shopkeeper were standing in for an evil dragon instead of humans, we would applaud this behavior. And from the AI’s perspective, reward is reward: it’s doing what maximizes its score,[2] and any sense of right or wrong besides reward is alien to it. The AI’s behavior is predictable from its incentives; any surprise we feel is because we brought in our own moral assumptions.

In conclusion, remember to have Amoral Interpretations of Zealous Intelligences!

[1] By “amoral”, I mean “ignoring morality”, in contrast to intentionally taking actions that you understand to be wrong, which I’d call “immoral”.

[2] Or, more precisely, “actions similar to actions that gave it a high score in training”, which may or may not maximize its score.

Also, in the Zelda example in the post, if we interpret the actions in the way you say (i.e. killing the shopkeeper is evil) then the AI is actually doing the opposite of betraying us when it makes its "treacherous" turn, right? Because around episode 3,000 it suddenly starts using the benevolent strategy, in addition to the violent one which it was using all along. (I realize this doesn't matter for the overall argument.)

Nice post! Do you think this is a correct interpretation? "Qualitatively different strategies emerge at different levels of capability. Just because the strategies that emerge at lower capability levels don't disempower us doesn't mean the ones at higher capability levels aren't going to disempower us either."